Rich Contexts in Neural Machine Translation

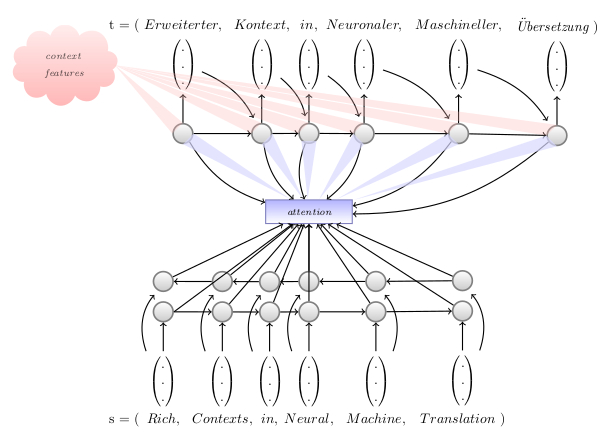

In the CONTRA project (Rich COntexts in Neural Machine TRAnslation), we conduct research on neural machine translation systems. These systems are called "neural" because their models are built with neural networks, for instance recurrent neural networks.

Neural machine translation is currently the best-performing and most widely used method for automatic translation. Its key advantages over previous methods (so-called "statistical" models) are:

- Long-distance dependencies: a neural model can capture dependencies between parts of a sentence that are far apart. For example, a system that translates into German can learn that a separable verb prefix is sometimes placed at the very end of the sentence.

- Naturalness and fluency of translations: neural translation generally results in more fluent and natural-sounding sentences in the target language.

- Arbitrary context: when translating, a neural system can consider not only the input sentence but any additional information as context, as long as it can be represented numerically.
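To make the idea of "context that can be represented numerically" concrete, here is a minimal sketch, not code from this project. It assumes one common approach: each extra piece of context (here a hypothetical per-token coreference label) gets its own embedding vector, which is concatenated to the word embedding before the encoder sees it. The vocabulary, the `NONE`/`COREF` labels, the dimensions, and the `encode_inputs` helper are all hypothetical, and the random tables stand in for embeddings a real system would learn during training.

```python
import random

# Hypothetical, toy-sized embedding dimensions.
EMB_DIM = 4   # size of a word embedding
CTX_DIM = 2   # size of a context-feature embedding


def make_table(keys, dim, seed):
    """Build a toy embedding table; real systems learn these vectors in training."""
    rng = random.Random(seed)
    return {k: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for k in keys}


word_emb = make_table(["she", "it", "sees", "the", "house"], EMB_DIM, seed=0)
# Hypothetical extra context: a coreference label attached to each source token.
ctx_emb = make_table(["NONE", "COREF"], CTX_DIM, seed=1)


def encode_inputs(tokens, context_labels):
    """Return one vector per token: word embedding followed by context embedding."""
    if len(tokens) != len(context_labels):
        raise ValueError("need exactly one context label per token")
    # List '+' concatenates, so each vector has EMB_DIM + CTX_DIM numbers.
    return [word_emb[t] + ctx_emb[c] for t, c in zip(tokens, context_labels)]


vectors = encode_inputs(["she", "sees", "the", "house"],
                        ["COREF", "NONE", "NONE", "NONE"])
print(len(vectors), len(vectors[0]))  # prints: 4 6
```

Because the context enters only as extra numbers in each input vector, the same mechanism could in principle carry other signals, such as syntactic labels or a document-level feature, without changing the rest of the model.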

In this project, we explore the potential of additional context that a neural translation system can be conditioned on, such as:

- coreference annotations that help disambiguate and translate phenomena such as pronouns

- syntactic structures

- multiple input languages

- document-level information

Using state-of-the-art methods, we will also train MT systems for the three major languages of Switzerland (French, German and Italian). These systems will be freely available.

Project Head

Researchers

Rico Sennrich

This project is funded by the Swiss National Science Foundation under grant 105212_169888 and starts in January 2017.

Project Outputs

Find more information about our work on the CONTRA Project Outputs page.