
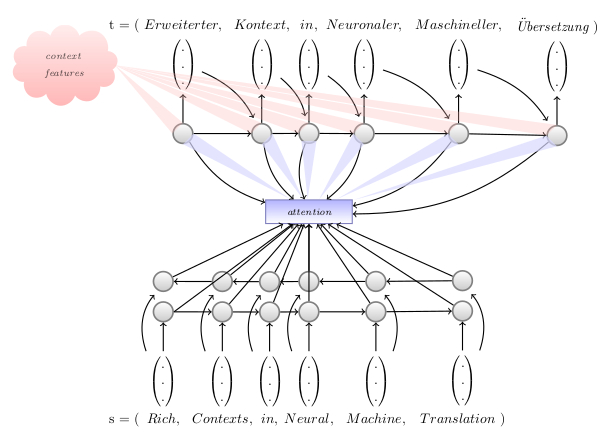

In the CONTRA project (Rich COntexts in Neural Machine TRAnslation) we conduct research on neural machine translation systems. This family of systems is called "neural" because models are built with neural networks, for instance recurrent neural nets.

Neural machine translation is currently the best-performing and most widely used method for automatic translation. Key advantages over previous methods (so-called "statistical" models) are:

In this project we explore the potential of additional context that a neural translation system can be conditioned on, such as

We will also train MT systems for the three major languages of Switzerland (French, German and Italian) using state-of-the-art methods. These systems will be available for free.

This project is funded by the Swiss National Science Foundation under grant 105212_169888 and starts in January 2017.

Find more information about our work on the Contra Project Outputs page.